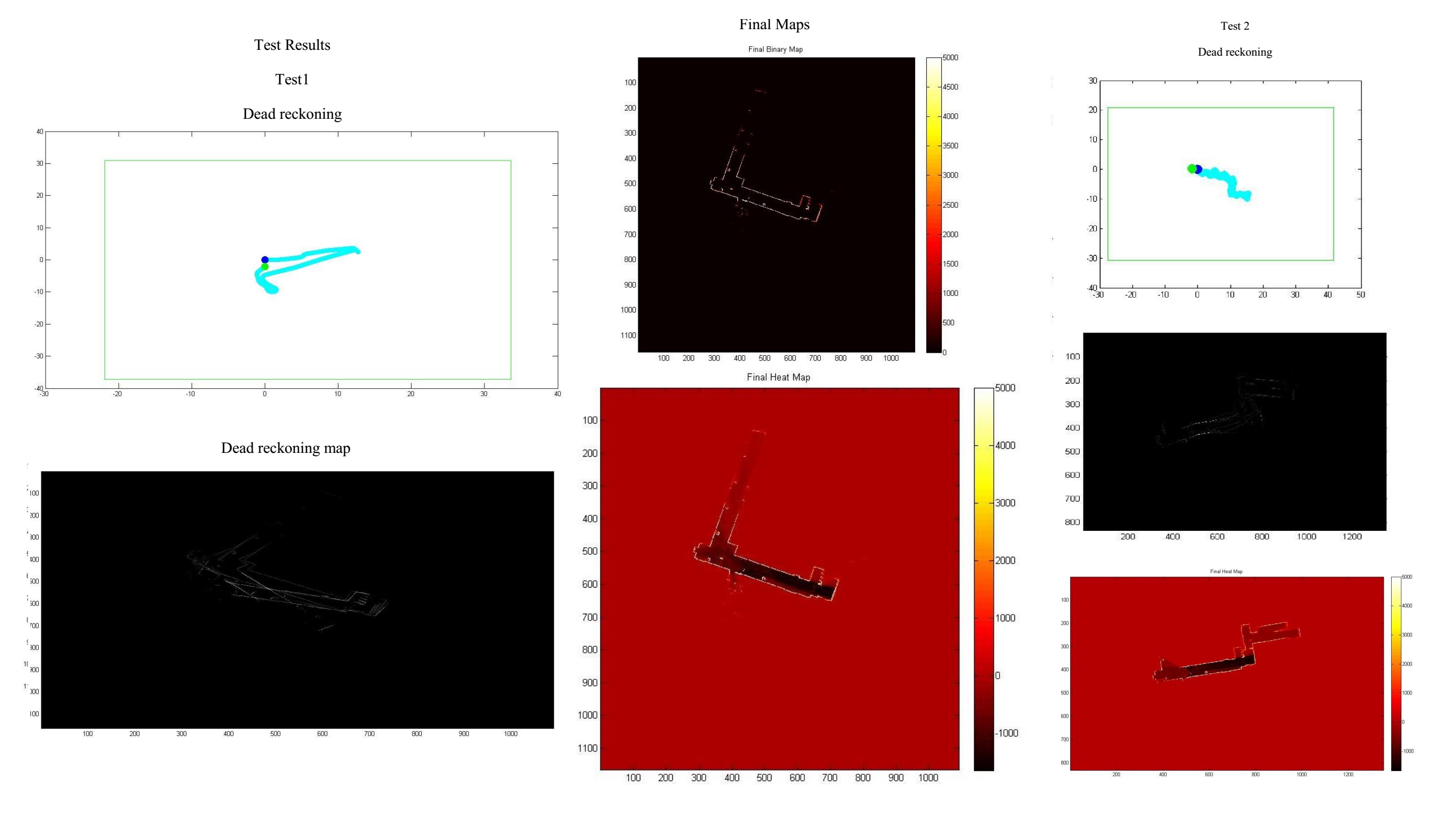

Simultaneous Localization and Mapping with Particle Filters Posted on August, 25th, 2014

Problem Statement

The objective of the project is to use data from the magic robot (a

mobile robot), with odometers on each of the 4 wheels, a LIDAR,

gyroscope (3 axes), accelerometer (3 axes) and a kinect sensor to

localize the robot and simultaneously map the environment by

implementing the Particle Filters SLAM algorithm.

Description of approach

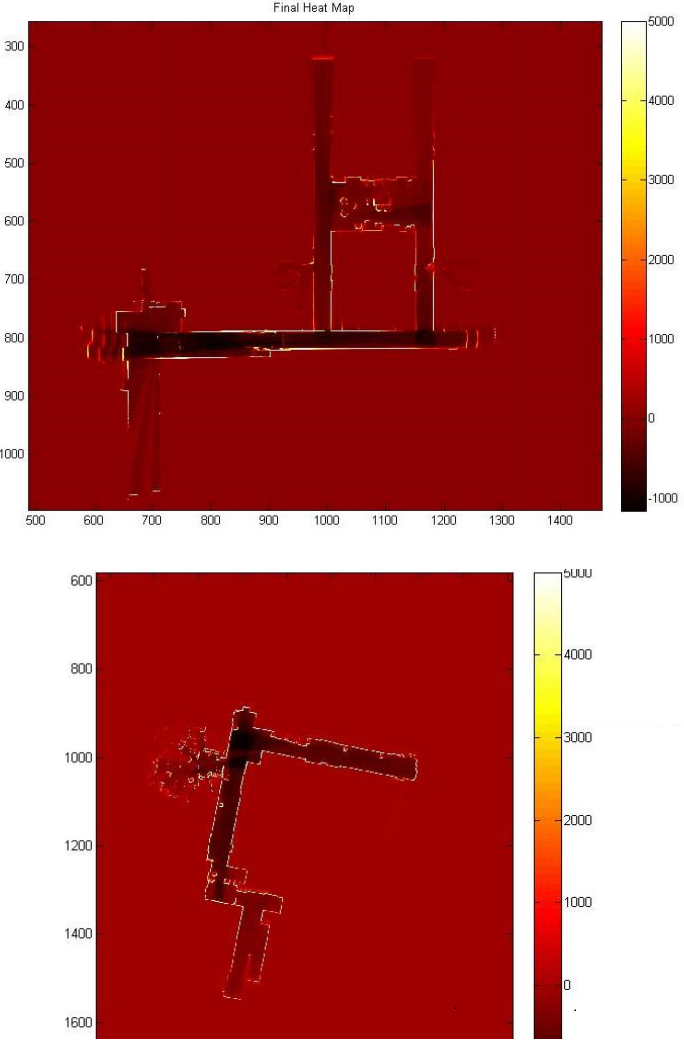

I removed the bias and multiplied the sensitivities to the IMU data (accelerometer and gyroscope) after smoothing. Then I ran Unscented Kalman Filter to get the yaw, pitch and roll of the robot at every time instant. This data is only used to remove the LIDAR ray points hitting the ceiling or the floor when the robot is on a ramp. I first do dead reckoning using only odometery and then get an approximate map size. I use 100 particles in my particle filter. I perform motion based on the odometery and then randomly jitter the particles along x, y and the orientation phi with a Gaussian centered at 0 and a standard deviation of 3 times the change in x, y and phi. Then I compute weight as the sum of the map values at the hit positions of the LIDAR rays where the map value is the log odd value of it being an obstacle versus an empty space. I choose the particle with the largest weight and update the map with the LIDAR points. I increment the log odds of the obstacles by 30, I use Bresenham’s algorithm to decrement the points which are empty by only 1. Finally I resample every time based on the weights of the particles.