Learning in Robotics Projects

Posted on August 25th, 2014

Learning in Robotics is a PhD-level course and one of the most draining and difficult courses at Penn, but it also gives you deep insight into machine learning algorithms. It is entirely project-based, with 6 projects. We learnt the state-of-the-art techniques in vision and machine learning used for various problems in robotics. It is the course where I had to use everything from machine learning, computer vision, DSP and control systems to linear algebra to solve the problems.

All the problems used datasets collected at Penn, and some of the data was deliberately made noisy to make the problems harder. No sample code or code framework was provided. The lectures came with reading assignments of research papers, whose methods we then implemented in MATLAB or any other programming language. All the algorithms had to be implemented from the ground up.

Projects

Color segmentation with Gaussians

Detect an object by color segmentation, modelling the colors with Gaussians, then estimate the object's depth from its shape properties.

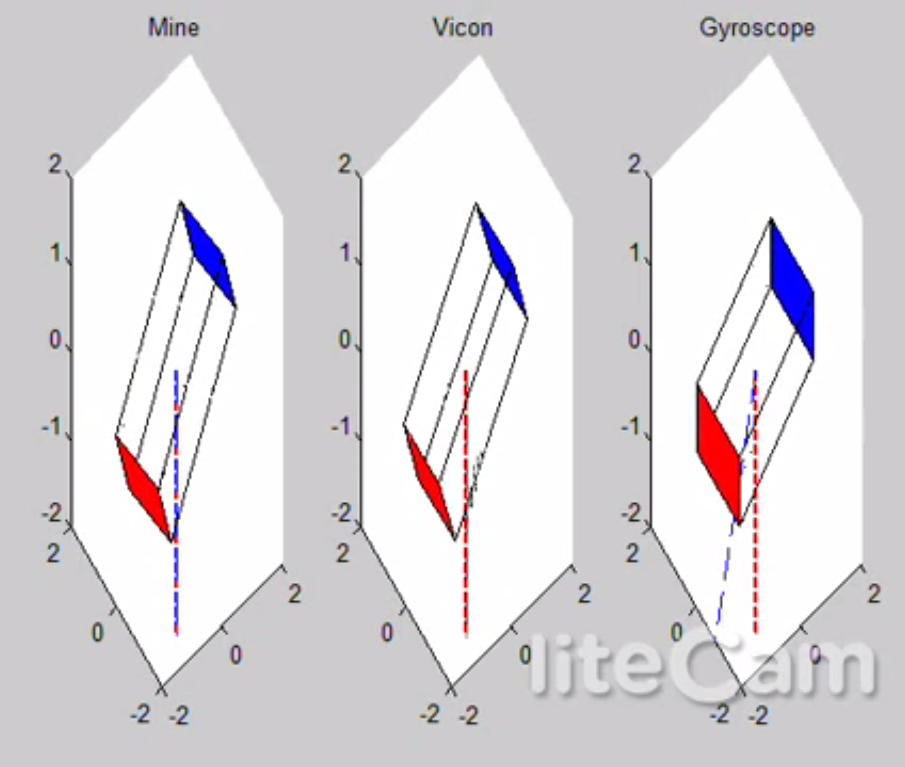
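As a rough illustration of the color model, the sketch below fits one Gaussian (mean and full 3x3 covariance) to hand-labelled RGB pixels of the object and another to background pixels, then labels a pixel by whichever model gives the higher log-likelihood. The single Gaussian per class, the equal class priors, the `reg` diagonal term and all function names are assumptions for this example; a mixture of Gaussians trained with EM is the natural extension when one Gaussian per color is too coarse.

```python
import math


def fit_gaussian(pixels, reg=1.0):
    """Fit a mean and full 3x3 covariance to (r, g, b) training pixels.

    reg (an assumed value) is added to the diagonal so the covariance stays invertible.
    """
    n = len(pixels)
    mean = [sum(p[k] for p in pixels) / n for k in range(3)]
    cov = [[sum((p[r] - mean[r]) * (p[c] - mean[c]) for p in pixels) / n
            + (reg if r == c else 0.0) for c in range(3)] for r in range(3)]
    return mean, cov


def inverse_and_det(m):
    """Closed-form inverse (adjugate / determinant) and determinant of a 3x3 matrix."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    inv = [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
           [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
           [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]
    return inv, det


def log_likelihood(pixel, mean, cov):
    """log N(pixel; mean, cov) for a 3-D Gaussian."""
    inv, det = inverse_and_det(cov)
    d = [pixel[k] - mean[k] for k in range(3)]
    mahalanobis = sum(d[r] * inv[r][c] * d[c] for r in range(3) for c in range(3))
    return -0.5 * (mahalanobis + math.log(det) + 3 * math.log(2 * math.pi))


def is_object(pixel, obj_model, bg_model):
    """Label a pixel as object if the object Gaussian explains it better (equal priors)."""
    return log_likelihood(pixel, *obj_model) > log_likelihood(pixel, *bg_model)
```

Applied to every pixel of an image, `is_object` yields a binary mask; the connected regions of that mask are the candidate objects whose shape would then feed the depth estimate.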

3D orientation tracking with UKF

Use the IMU signals from a 3-axis gyroscope and a 3-axis accelerometer to obtain a robust estimate of the orientation of an object rotated in 3D space by implementing an Unscented Kalman Filter (UKF). Then use the estimated orientation to stitch a panoramic image from the photos taken by a camera mounted on the object.

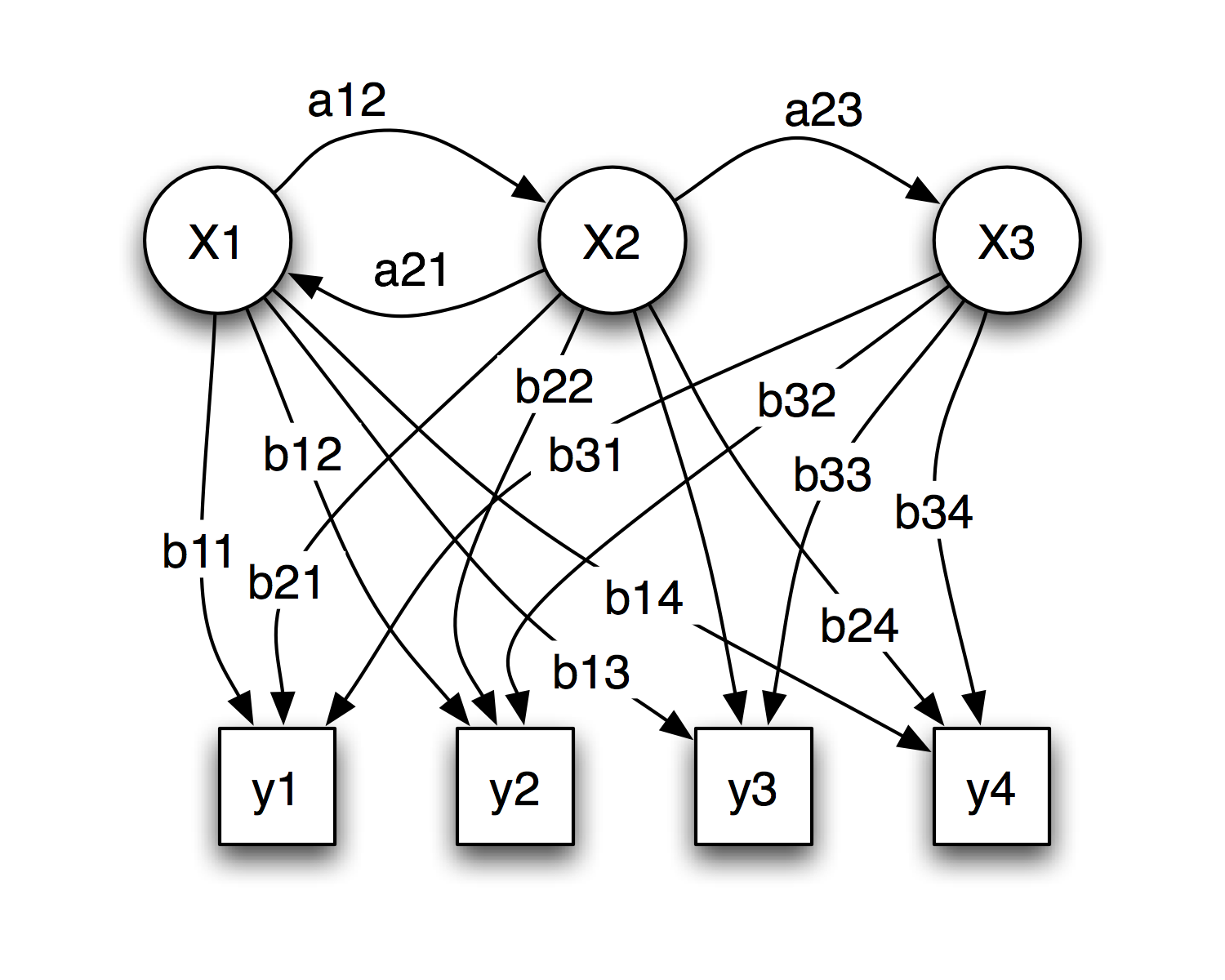
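To show the unscented transform at the heart of the filter without the quaternion machinery, here is a deliberately reduced sketch: the state is a single tilt angle, the gyro rate drives the prediction, and the accelerometer is assumed to measure the normalized gravity component sin(angle). Sigma points use the basic kappa parameterization with n + kappa = 3. The one-dimensional state, this measurement model, the noise values and the function names are all illustrative assumptions; the actual project tracks full 3D orientation, where sigma points are commonly formed by perturbing a quaternion with rotation vectors.

```python
import math


def sigma_points(x, P, kappa=2.0):
    """Scalar sigma points and weights; with n = 1 and kappa = 2, n + kappa = 3."""
    n = 1
    spread = math.sqrt((n + kappa) * P)
    weights = [kappa / (n + kappa), 0.5 / (n + kappa), 0.5 / (n + kappa)]
    return [x, x + spread, x - spread], weights


def ukf_step(x, P, gyro_rate, accel_z, dt, Q=1e-4, R=1e-3):
    """One predict/update cycle for a single tilt angle (radians).

    gyro_rate drives the process model; accel_z is assumed to be the
    gravity component sin(angle) seen by a normalized accelerometer.
    """
    # Predict: push every sigma point through the gyro integration model.
    pts, w = sigma_points(x, P)
    pred = [p + gyro_rate * dt for p in pts]
    x_pred = sum(wi * p for wi, p in zip(w, pred))
    P_pred = sum(wi * (p - x_pred) ** 2 for wi, p in zip(w, pred)) + Q

    # Update: redraw sigma points and map them through the measurement model.
    pts, w = sigma_points(x_pred, P_pred)
    z_pts = [math.sin(p) for p in pts]
    z_pred = sum(wi * z for wi, z in zip(w, z_pts))
    P_zz = sum(wi * (z - z_pred) ** 2 for wi, z in zip(w, z_pts)) + R
    P_xz = sum(wi * (p - x_pred) * (z - z_pred) for wi, p, z in zip(w, pts, z_pts))
    K = P_xz / P_zz  # Kalman gain
    return x_pred + K * (accel_z - z_pred), P_pred - K * P_zz * K
```

For example, a gyro that over-reads by 0.02 rad/s drifts by 0.1 rad over 50 steps of 0.1 s when integrated on its own, while feeding the sin(angle) readings through `ukf_step` at each step keeps the estimate close to the true angle.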

HMM for Gesture Recognition

Implement Hidden Markov Models (HMMs) to recognize gestures performed with a phone, using its IMU, which has a 3-axis gyroscope and a 3-axis accelerometer. Use clustering for vector quantization of the IMU data.

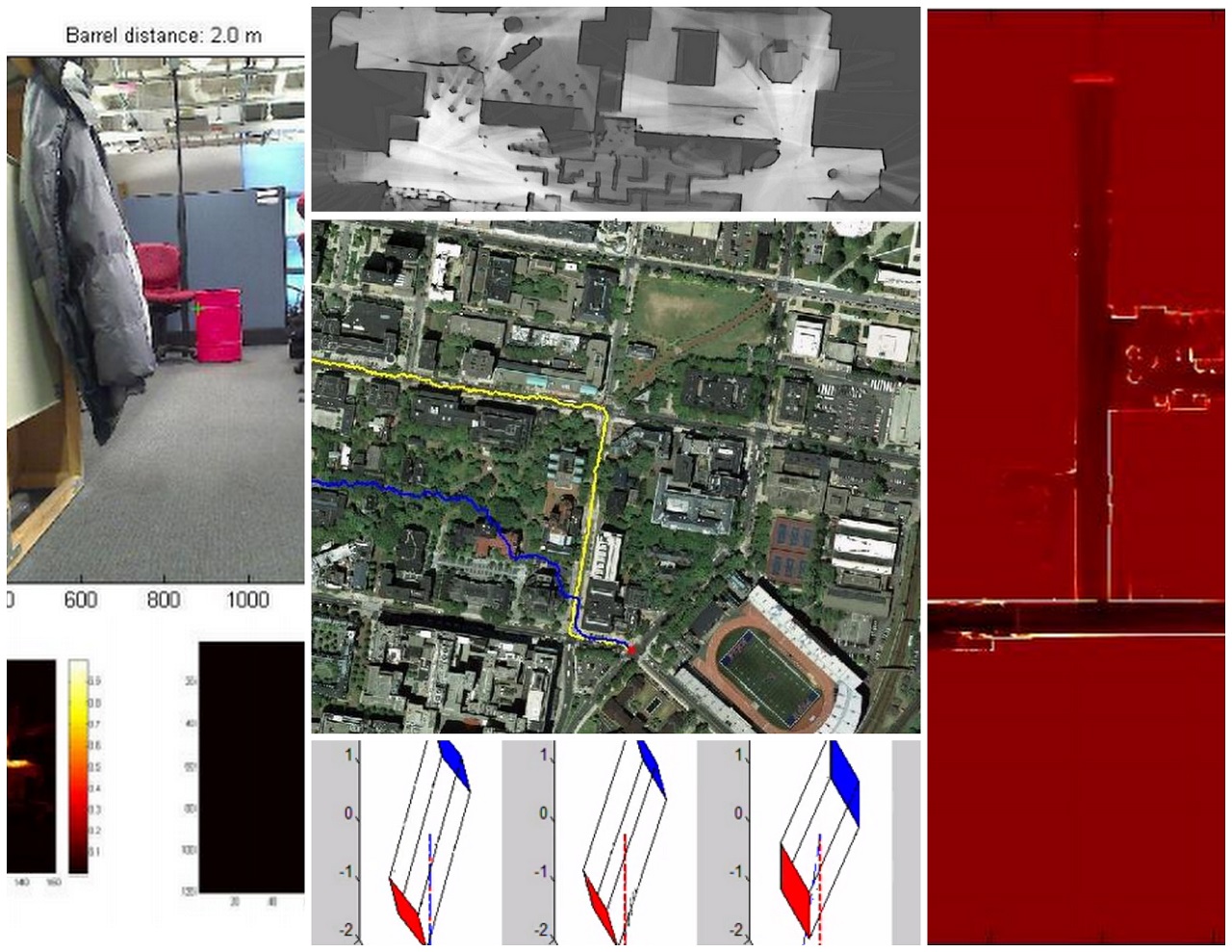
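One plausible shape for this pipeline, sketched under my own assumptions: k-means (Lloyd's algorithm, seeded from the first k samples so it is deterministic) turns each 6-D IMU sample into a cluster index, one discrete HMM per gesture scores the resulting symbol sequence with the scaled forward algorithm, and the gesture whose model gives the highest log-likelihood wins. Training the HMM parameters (Baum-Welch) is omitted, and the function names and any model parameters you plug in are hypothetical.

```python
import math


def nearest(sample, centroids):
    """Index of the centroid closest (squared Euclidean distance) to sample."""
    return min(range(len(centroids)),
               key=lambda j: sum((a - b) ** 2 for a, b in zip(sample, centroids[j])))


def kmeans(samples, k, iters=20):
    """Plain Lloyd's k-means; the first k samples seed the centroids."""
    centroids = [list(s) for s in samples[:k]]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for s in samples:
            clusters[nearest(s, centroids)].append(s)
        for j, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster empties out
                centroids[j] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids


def log_likelihood(obs, pi, A, B):
    """Scaled forward algorithm: log P(obs | pi, A, B) for a discrete HMM."""
    n = len(pi)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    log_p = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][obs[t]]
                     for j in range(n)]
        scale = sum(alpha)
        log_p += math.log(scale)  # log P(obs) is the sum of the log scale factors
        alpha = [a / scale for a in alpha]
    return log_p


def classify(obs, models):
    """Return the name of the gesture model with the highest log-likelihood."""
    return max(models, key=lambda name: log_likelihood(obs, *models[name]))
```

Usage: `symbols = [nearest(s, centroids) for s in imu_samples]` quantizes a recording, and `classify(symbols, models)` picks the gesture, where `models` maps each gesture name to its `(pi, A, B)` tuple. Rescaling alpha at every step is what keeps long recordings from underflowing to zero.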

SLAM with Particle Filters

Use data from a mobile robot with odometers on each of its 4 wheels, a LIDAR, a 3-axis gyroscope and a 3-axis accelerometer to localize the robot and simultaneously map the environment (SLAM) using Particle Filters (PF).

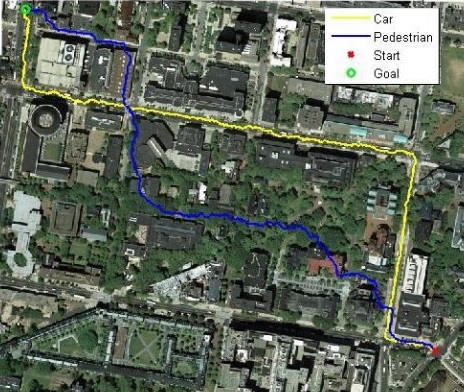
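The localization half of a particle filter can be sketched in one dimension: a robot drives along a line toward a wall, wheel odometry moves the particles, a single range reading to the wall weights them, and low-variance (systematic) resampling draws the next generation. The 1-D world, the wall at x = 10, the noise levels and the function names are hypothetical; a full SLAM version would use (x, y, heading) particles, score each one by matching LIDAR scans against the map being built (for example an occupancy grid), and update that map from the best particle, which this sketch leaves out.

```python
import math
import random


def low_variance_resample(particles, weights, rng):
    """Systematic resampling: one random offset, then n evenly spaced pointers."""
    n = len(particles)
    step = 1.0 / n
    u = rng.uniform(0.0, step)
    i, cumulative = 0, weights[0]
    resampled = []
    for _ in range(n):
        # Advance to the particle whose cumulative weight covers pointer u.
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
        u += step
    return resampled


def pf_step(particles, odom, z, rng, wall=10.0, motion_sigma=0.1, meas_sigma=0.5):
    """One predict/weight/resample cycle for a robot on a line facing a wall.

    odom: distance reported by the wheel odometry; z: measured range to the wall.
    """
    # Predict: apply the odometry with Gaussian noise to every particle.
    moved = [x + odom + rng.gauss(0.0, motion_sigma) for x in particles]
    # Weight: Gaussian likelihood of the range reading given each particle.
    weights = [math.exp(-0.5 * ((z - (wall - x)) / meas_sigma) ** 2) for x in moved]
    total = sum(weights)
    if total == 0.0:  # every particle is implausible: fall back to uniform weights
        weights = [1.0 / len(moved)] * len(moved)
    else:
        weights = [w / total for w in weights]
    return low_variance_resample(moved, weights, rng)
```

Passing a seeded `random.Random` makes runs reproducible. Systematic resampling is used here because it needs a single random number per step and preserves the weight distribution with less sampling noise than drawing each particle independently.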

Imitation Learning for path planning

Use an aerial image of the Penn campus from Google Maps to learn a model that predicts the optimal path a car and a pedestrian would take. The model is learnt by imitation learning (closely related to inverse reinforcement learning) with the LEARCH algorithm, so that it produces behavior similar to an expert policy.
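LEARCH itself fits a regressor to a functional gradient and updates the cost map in log space. The sketch below is a simplified, linear-feature variant written under my own assumptions: each grid cell has a feature vector (here just hypothetical "road" and "grass" indicators standing in for features extracted from the aerial image), cell cost is exp(w · f), Dijkstra plans the current optimal path, and w is nudged so that features on the expert's path get cheaper and features on the planner's path get more expensive. The loss-augmentation step of the original algorithm is omitted, and all names are illustrative; one weight vector would be trained for cars and another for pedestrians.

```python
import heapq
import math


def cost_map(w, features):
    """Cell cost = exp(w . f): always positive and log-linear in the features."""
    return [[math.exp(sum(wi * fi for wi, fi in zip(w, f))) for f in row]
            for row in features]


def plan(costs, start, goal):
    """Dijkstra on a 4-connected grid; path cost = sum of the costs of visited cells."""
    rows, cols = len(costs), len(costs[0])
    dist = {start: costs[start[0]][start[1]]}
    parent = {start: None}
    heap = [(dist[start], start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                nd = d + costs[nr][nc]
                if nd < dist.get((nr, nc), math.inf):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))
    path, cell = [], goal
    while cell is not None:
        path.append(cell)
        cell = parent[cell]
    return path[::-1]


def feature_counts(path, features):
    """Sum of the feature vectors over the cells a path visits."""
    k = len(features[0][0])
    return [sum(features[r][c][i] for r, c in path) for i in range(k)]


def train(features, start, goal, expert_path, iters=10, lr=0.1):
    w = [0.0] * len(features[0][0])
    f_expert = feature_counts(expert_path, features)
    for _ in range(iters):
        planned = plan(cost_map(w, features), start, goal)
        f_planned = feature_counts(planned, features)
        # Exponentiated update in log-cost space: push log-cost down on features
        # the expert visited and up on features the planner visited.
        w = [wi - lr * (fe - fp) for wi, fe, fp in zip(w, f_expert, f_planned)]
    return w
```

Once the planned path matches the expert's, the feature counts cancel and the weights stop changing, which is the fixed point this kind of training aims for.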

Multi-robot Graph-SLAM

Use data obtained from five robots exploring different but overlapping regions of the same environment. Each robot has a vertical and a horizontal LIDAR, an IMU and a GPS tracking system, and runs a local SLAM algorithm. Use loop closures to merge the maps by implementing Bundle Adjustment or the GraphSLAM algorithm.
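Reduced to one dimension, map merging becomes a linear least-squares problem over a pose graph: every odometry step, and every loop closure between robots, is an edge stating "pose j is z ahead of pose i". The sketch below builds the normal equations H x = b from those edges and solves them directly. The 1-D poses, the edge weights, anchoring pose 0 at the origin and the function names are assumptions for illustration; with real SE(2) or SE(3) poses the residuals are nonlinear, so GraphSLAM re-linearizes and solves this kind of system repeatedly (Gauss-Newton).

```python
def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [b[r]] for r, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def optimize_pose_graph(num_poses, edges, anchor=0, anchor_weight=1e6):
    """Least-squares 1-D pose graph.

    edges: (i, j, z, w) tuples meaning "pose j is z ahead of pose i" with weight w.
    A strong prior pins the anchor pose to 0 so the solution is unique.
    """
    H = [[0.0] * num_poses for _ in range(num_poses)]
    b = [0.0] * num_poses
    for i, j, z, w in edges:
        # Residual x_j - x_i - z has Jacobian +1 at j and -1 at i.
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    H[anchor][anchor] += anchor_weight
    return solve_linear(H, b)
```

For instance, if robot A owns poses 0 to 2 and robot B owns poses 3 to 5, each with unit odometry steps, two inter-robot closures `(1, 3, 0.5, 1.0)` and `(2, 4, 0.5, 1.0)` are enough to place B's trajectory in A's frame, and the solver returns values close to `[0, 1, 2, 1.5, 2.5, 3.5]`. When closures disagree with odometry, the least-squares solution spreads the error over all the edges instead of dumping it on one.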